Carrying out a View Model Request

April 07, 2023

Happy Friday, everyone!

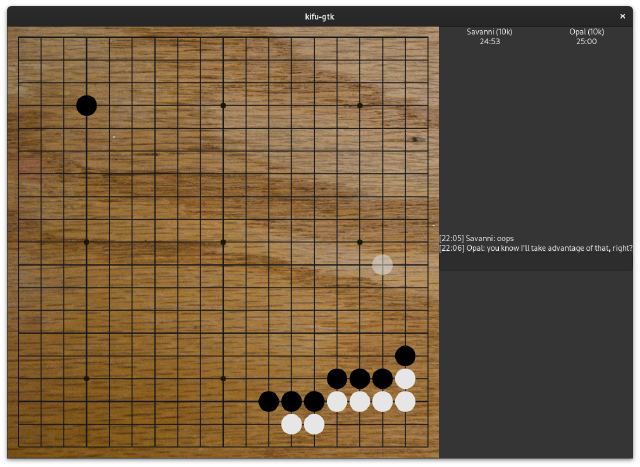

Last week, I talked about view models in my Kifu application. This week, I’ve continued on by actually implementing the most important thing that can happen in a Go application: being able to put a stone on the board.

For this article, let’s put this request/response architecture into practice. You can watch me explaining and implementing this in my stream, Putting a stone on the board.

The Request

Let’s review the request itself:

    #[derive(Clone, Copy, Debug)]
    pub enum IntersectionElement {
        Unplayable,
        Empty(Request),
        Filled(StoneElement),
    }

    #[derive(Clone, Debug)]
    pub struct BoardElement {
        pub size: Size,
        pub spaces: Vec<IntersectionElement>,
    }

    #[derive(Clone, Copy, Debug, PartialEq, Eq)]
    pub enum Request {
        PlayingField,
        PlayStoneRequest(PlayStoneRequest),
    }

So, when I click on a space with an IntersectionElement::Empty value, the contract between the core and the UI states that the UI should simply dispatch that particular request to the core. Let’s say the request is Request::PlayStoneRequest(PlayStoneRequest{ column: 3, row: 3 }). Here’s what happens:

- The UI dispatches the request to the core, then returns to normal operations.

- The core does whatever processing is necessary. In this case, it involves putting a stone on the board, figuring out what, if any, groups get captured, maybe sending the move across the network to another player, and then building an updated representation of the board.

- The core communicates the updated representation to the UI.

- The UI, upon receiving this message from the core, re-renders itself with the new representation.
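The UI half of that contract can be sketched roughly as follows. This is only an illustration: `Size`, `Color`, the fields of `StoneElement` and `PlayStoneRequest`, the row-major layout of `spaces`, and the `request_at` and `empty_board` helpers are all assumptions made for the example, not Kifu's actual definitions.

```rust
// Hypothetical stand-ins for types the article references but does not show.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct Size {
    pub width: u8,
    pub height: u8,
}

#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub enum Color {
    Black,
    White,
}

#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct StoneElement {
    pub color: Color,
}

#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct PlayStoneRequest {
    pub column: u8,
    pub row: u8,
}

#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub enum Request {
    PlayingField,
    PlayStoneRequest(PlayStoneRequest),
}

#[derive(Clone, Copy, Debug)]
pub enum IntersectionElement {
    Unplayable,
    Empty(Request),
    Filled(StoneElement),
}

#[derive(Clone, Debug)]
pub struct BoardElement {
    pub size: Size,
    pub spaces: Vec<IntersectionElement>,
}

impl BoardElement {
    /// Returns the request attached to the clicked space, if it has one.
    /// Assumes `spaces` is stored row by row (an assumption of this sketch).
    pub fn request_at(&self, column: u8, row: u8) -> Option<Request> {
        if column >= self.size.width || row >= self.size.height {
            return None;
        }
        let index = row as usize * self.size.width as usize + column as usize;
        match self.spaces.get(index)? {
            IntersectionElement::Empty(request) => Some(*request),
            IntersectionElement::Unplayable | IntersectionElement::Filled(_) => None,
        }
    }
}

/// Builds a board where every space is empty and carries the request
/// that would place a stone on it.
pub fn empty_board(size: Size) -> BoardElement {
    let mut spaces = Vec::with_capacity(size.width as usize * size.height as usize);
    for row in 0..size.height {
        for column in 0..size.width {
            spaces.push(IntersectionElement::Empty(Request::PlayStoneRequest(
                PlayStoneRequest { column, row },
            )));
        }
    }
    BoardElement { size, spaces }
}
```

A click handler would call `request_at` with the clicked coordinates and, if it gets `Some(request)`, hand that value to the core unchanged. The UI never constructs a request itself.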

Today we will skip all of the magic involved in evaluating the new board state, instead just being naive and placing the stone on the board No Matter What. The question becomes, with a decoupled system, how do we get this flow across application boundaries?

The FFI

This is the FFI that I have written, but it’s important to emphasize that it is customized for a Rust GTK application. GTK is not thread-safe, and Rust represents this by not allowing any components to be shared across thread boundaries (components are not Sync or Send).

Additionally, it is convenient to have all GTK processing occur in the main loop of the application. Since my core code is inherently asynchronous, the easiest tool I could reach for is the custom channel type that GTK-RS provides:

    move |app| {
        let (gtk_tx, gtk_rx) = gtk::glib::MainContext::channel::<Response>(gtk::glib::PRIORITY_DEFAULT);
        ...
    }

Rust channels, including this custom one, are Multi-Producer-Single-Consumer. Rust enforces this by allowing the sending half to be cloned as often as we want, but not allowing the receiver to be cloned. Only one owner can receive and process the messages that arrive on the receiver, but anything holding a sender can send to it. So I’m using the above channel to allow core operations to send their results back to my UI, and my UI just processes those results as they arrive.
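To make the multi-producer, single-consumer shape concrete, here is a small sketch using the standard library's `std::sync::mpsc` channel as a stand-in for the glib one. The function name `collect_from_producers` and the message values are made up for the example.

```rust
use std::sync::mpsc;
use std::thread;

/// Spawns `producers` threads that each send one message, then drains
/// everything from the single receiver. The result is sorted because the
/// arrival order across threads is not deterministic.
pub fn collect_from_producers(producers: u32) -> Vec<u32> {
    let (tx, rx) = mpsc::channel::<u32>();

    let handles: Vec<_> = (0..producers)
        .map(|id| {
            // The sending half can be cloned once per producer.
            let tx = tx.clone();
            thread::spawn(move || {
                tx.send(id * 10).expect("receiver is still alive");
            })
        })
        .collect();

    // Drop the original sender so the channel closes once every clone is gone.
    drop(tx);

    for handle in handles {
        handle.join().expect("producer thread panicked");
    }

    // `rx` cannot be cloned, so this is the only place messages can be read.
    let mut received: Vec<u32> = rx.iter().collect();
    received.sort_unstable();
    received
}
```

The glib channel follows the same ownership pattern. The difference is that its receiver is attached to the GTK main loop instead of being drained with an iterator.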

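    move |app| {
        let (gtk_tx, gtk_rx) = gtk::glib::MainContext::channel::<Response>(gtk::glib::PRIORITY_DEFAULT);
        ...
        // Runs on the GTK main loop each time the core sends a response.
        gtk_rx.attach(None, move |message| {
            match message {
                Response::PlayingFieldView(view) => {
                    /* do everything necessary to update the current UI */
                }
            }
            // Keep the receiver attached so later responses are handled too.
            gtk::glib::Continue(true)
        });
    }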

And then on the other side, I have the function that dispatches requests into the core:

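    #[derive(Clone)]
    pub struct CoreApi {
        pub gtk_tx: gtk::glib::Sender<Response>,
        pub rt: Arc<Runtime>,
        pub core: CoreApp,
    }

    impl CoreApi {
        pub fn dispatch(&self, request: Request) {
            self.rt.spawn({
                // Clone the handles so the task owns everything it needs.
                let gtk_tx = self.gtk_tx.clone();
                let core = self.core.clone();
                async move {
                    let response = core.dispatch(request).await;
                    // glib's Sender::send is synchronous, so there is nothing to await.
                    // The error case (receiver already gone) is ignored here.
                    let _ = gtk_tx.send(response);
                }
            });
        }
    }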

Dispatch spawns off a new asynchronous operation that is going to handle all of the processing. Whatever calls dispatch is now free to continue doing whatever it needs to do. UI elements generally cannot wait around for a response, so I don’t even give them the option to. Under the hood, though, this task will send a request to the core, await the response, and then send the response to the gtk_tx channel, the one I defined above.
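The same fire-and-forget shape can be tried out without GTK or an async runtime. The sketch below swaps in a plain thread for the spawned task and `std::sync::mpsc` for the glib channel. The simplified `Request` and `Response` variants and the `core_dispatch` function are placeholders invented for the example.

```rust
use std::sync::mpsc::{self, Receiver, Sender};
use std::thread;
use std::time::Duration;

// Simplified, hypothetical message types for this sketch only.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub enum Request {
    PlayStone { column: u8, row: u8 },
}

#[derive(Clone, Debug, PartialEq, Eq)]
pub enum Response {
    StonePlaced { column: u8, row: u8 },
}

/// Stand-in for the core: pretends to work for a moment, then answers.
fn core_dispatch(request: Request) -> Response {
    thread::sleep(Duration::from_millis(10));
    match request {
        Request::PlayStone { column, row } => Response::StonePlaced { column, row },
    }
}

#[derive(Clone)]
pub struct CoreApi {
    ui_tx: Sender<Response>,
}

impl CoreApi {
    /// Returns the API handle plus the single receiver the UI would own.
    pub fn new() -> (CoreApi, Receiver<Response>) {
        let (ui_tx, ui_rx) = mpsc::channel();
        (CoreApi { ui_tx }, ui_rx)
    }

    /// Starts the work on another thread and returns immediately.
    /// There is no return value, so the caller has nothing to wait on.
    pub fn dispatch(&self, request: Request) {
        let ui_tx = self.ui_tx.clone();
        thread::spawn(move || {
            let response = core_dispatch(request);
            // If the UI has already gone away, the response is simply dropped.
            let _ = ui_tx.send(response);
        });
    }
}
```

The caller of `dispatch` gets control back right away, and the response later shows up on the receiver returned by `CoreApi::new`, which plays the role of `gtk_rx` here.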

The Core dispatch function looks pretty pedestrian. You could find something like this in the server side of any client/server application that references a global shared state.

    pub async fn dispatch(&self, request: Request) -> Response {
        match request {
            Request::PlayStoneRequest(request) => {
                let mut app_state = self.state.write().unwrap();
                app_state.place_stone(request);
                let game = app_state.game.as_ref().unwrap();
                Response::PlayingFieldView(playing_field(game))
            }
            ...
        }
    }
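For a feel of what the naive version of this handler does end to end, here is a synchronous sketch built on `Arc<RwLock<_>>`. It is a simplification under stated assumptions: `AppState` here is just a grid of booleans, `Space` is a cut-down view model, and `play_stone` is a hypothetical helper. None of these are the real Kifu types.

```rust
use std::sync::{Arc, RwLock};

#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct PlayStoneRequest {
    pub column: u8,
    pub row: u8,
}

/// Hypothetical, simplified view of one intersection.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub enum Space {
    Empty(PlayStoneRequest),
    Filled,
}

#[derive(Debug)]
pub struct AppState {
    width: u8,
    height: u8,
    stones: Vec<bool>, // row-major; true means a stone is present
}

impl AppState {
    pub fn new(width: u8, height: u8) -> Self {
        AppState { width, height, stones: vec![false; width as usize * height as usize] }
    }

    /// Naive placement: no capture, ko, or turn checks.
    /// Requests outside the board are ignored.
    pub fn place_stone(&mut self, request: PlayStoneRequest) {
        if request.column < self.width && request.row < self.height {
            let index = request.row as usize * self.width as usize + request.column as usize;
            self.stones[index] = true;
        }
    }

    /// Rebuilds the view: every empty space carries the request that fills it.
    pub fn view(&self) -> Vec<Space> {
        self.stones
            .iter()
            .enumerate()
            .map(|(index, &filled)| {
                if filled {
                    Space::Filled
                } else {
                    let column = (index % self.width as usize) as u8;
                    let row = (index / self.width as usize) as u8;
                    Space::Empty(PlayStoneRequest { column, row })
                }
            })
            .collect()
    }
}

#[derive(Clone)]
pub struct CoreApp {
    state: Arc<RwLock<AppState>>,
}

impl CoreApp {
    pub fn new(width: u8, height: u8) -> Self {
        CoreApp { state: Arc::new(RwLock::new(AppState::new(width, height))) }
    }

    /// Takes the write lock, mutates the shared state, and returns a fresh view.
    pub fn play_stone(&self, request: PlayStoneRequest) -> Vec<Space> {
        let mut state = self.state.write().unwrap();
        state.place_stone(request);
        state.view()
    }
}
```

Because `CoreApp` only holds an `Arc`, cloning it (as `CoreApi::dispatch` does) gives every task a handle to the same underlying state.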

Looking to Kotlin

I’ve never written more than a few trivial lines of Kotlin or Android application code, so I have a lot in front of me on that topic. The FFI that I wrote above cannot work on Android: GTK does not exist there, so neither does its communication channel. CoreApi is also a struct that I put into the GTK application, not into the core. While I do not yet know what the Android FFI is going to look like, I imagine that I’m going to build a library that provides Android bindings and internally manages the runtime executor.

Wrapping up

What I showed here is a mechanical nuts-and-bolts illustration of a basic concept:

- The UI doesn’t know what requests it has. It simply knows, by contract, that when the user clicks in a place, the UI needs to find the request associated with that space and send it to the core. The request itself is carried in the definition of the particular UI element, such as IntersectionElement::Empty(Request). The UI does not wait for a response, because there is no way to know how long the request will take to resolve, and the UI must remain responsive.

- The dispatch function creates an async task for processing the request.

- The dispatch function also knows how to send the response back to the UI. The GTK UI has one place in which it can process all such asynchronous signals.

Over the next few weeks, I face the task of implementing the rules of Go, and also setting up an Android toolchain.